How to install open-webui for Llama models on Fedora?

31/12/2024

@ Saigon

LLM

This guide covers installing open-webui on Fedora and serving a Llama model to it through Ollama.

1. Update Python & pip

```shell
$ sudo dnf update python3 -y
$ pip install --upgrade pip
```

2. Install open-webui
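Before creating the virtual environment, it may be worth confirming that the interpreter is one open-webui supports. This sketch assumes a supported range of Python 3.11 to 3.12, which is what the project declared around the time of writing. That range is an assumption, so check the open-webui README on GitHub for the current one. `python_version` and `python_supported` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: check the Python version before installing open-webui.
# ASSUMPTION: the supported range is 3.11-3.12; adjust if the project changes it.

# Print MAJOR.MINOR.MICRO for the given interpreter (default: python3).
python_version() {
  "${1:-python3}" -c 'import sys; print("%d.%d.%d" % sys.version_info[:3])'
}

# Return 0 if a version string such as "3.12.8" is inside the assumed range.
python_supported() {
  local major minor rest
  IFS=. read -r major minor rest <<< "$1"
  # Both leading fields must be plain integers; anything else is rejected.
  [[ "$major" =~ ^[0-9]+$ && "$minor" =~ ^[0-9]+$ ]] || return 1
  (( major == 3 && minor >= 11 && minor <= 12 ))
}
```

For example, `python_supported "$(python_version python)" && echo "Python OK"` checks the same `python` command used to create the venv below.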

- Create the directory `/opt/open-webui`:

```shell
$ sudo mkdir /opt/open-webui
$ cd /opt/open-webui
```

- Change its ownership to your own user (replace `nguyenvinhlinh` with your username). Do not run open-webui as root, for security reasons.

```shell
$ sudo chown -Rv nguyenvinhlinh:nguyenvinhlinh /opt/open-webui/
```

- Set up a virtual environment (`venv`) and install `open-webui` (do not run as root):

```shell
$ python -m venv open-webui-env
$ source open-webui-env/bin/activate
$ pip install open-webui
```

3. Start the open-webui server (do not run as root)

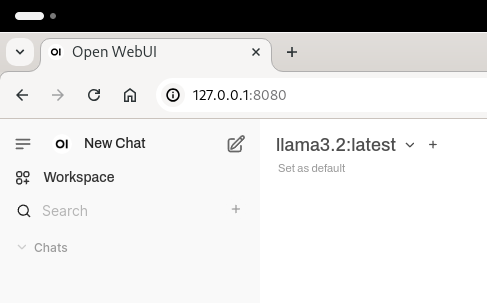
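Because the server should not run as root, a small guard can refuse to start it under UID 0. This is a minimal sketch; `require_non_root` is a hypothetical helper name, and its optional argument exists only so the check can be exercised without switching users.

```shell
#!/usr/bin/env bash
# Sketch: refuse to continue when running as root (UID 0).
# The optional first argument overrides the detected UID (handy for testing).
require_non_root() {
  local uid="${1:-$(id -u)}"
  if [[ "$uid" -eq 0 ]]; then
    echo "refusing to run open-webui as root; switch to your normal user" >&2
    return 1
  fi
}
```

Usage: `require_non_root && open-webui serve`.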

```shell
$ open-webui serve
```

- The server should be available at http://127.0.0.1:8080.
- All data (SQLite, uploads, cache, DB) can be found at `/opt/open-webui/open-webui-env/lib/python3.12/site-packages/open_webui/data` (the `python3.12` part matches the Python version used to create the venv).
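The server may take a while to start before http://127.0.0.1:8080 responds. A minimal polling sketch, assuming `curl` is installed, could look like this; `wait_for_http` is a hypothetical name and the 60-second default is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Sketch: poll a URL until it responds or a timeout (seconds, approximate) expires.
# curl -f treats HTTP 4xx/5xx as failure; redirects (3xx) count as success.
wait_for_http() {
  local url="$1" timeout="${2:-60}" waited=0
  until curl -fs -o /dev/null --max-time 2 "$url"; do
    if (( waited >= timeout )); then
      echo "no response from $url after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$(( waited + 1 ))
  done
  echo "$url is up"
}
```

With `open-webui serve` running in one terminal, run `wait_for_http http://127.0.0.1:8080` in another.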

4. Install Ollama

```shell
$ curl -fsSL https://ollama.com/install.sh | sh
$ ollama --version
```

5. Use Ollama to pull (download) a model
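`ollama pull` talks to the local Ollama server, which listens on `http://127.0.0.1:11434` by default. This sketch wraps two checks around the pull. It first confirms that the server answers at that base URL, then confirms that the model appears in `ollama list` afterwards. The helper names are hypothetical. The matching rule is this sketch's own choice: a bare name such as `llama3.2` also matches any tag like `llama3.2:latest`.

```shell
#!/usr/bin/env bash
# Sketch: sanity checks around `ollama pull`.

# Return 0 if an Ollama server answers at BASE_URL (default: local port 11434).
ollama_reachable() {
  local base_url="${1:-http://127.0.0.1:11434}"
  curl -fs -o /dev/null --max-time 3 "$base_url/"
}

# Read `ollama list` output on stdin (header line first); return 0 if MODEL is listed.
# "llama3.2" matches "llama3.2" or "llama3.2:<tag>" but not "llama3.2-vision:<tag>".
has_model() {
  awk -v m="$1" '
    NR > 1 && ($1 == m || index($1, m ":") == 1) { found = 1 }
    END { exit !found }'
}

# Convenience wrapper that queries the local Ollama installation.
model_pulled() {
  ollama list | has_model "$1"
}
```

Usage: `ollama_reachable && ollama pull llama3.2 && model_pulled llama3.2 && echo ready`.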

```shell
$ ollama pull llama3.2
```

6. Run open-webui as a systemd service (`/etc/systemd/system/open-webui.service`)
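The unit file that follows hardcodes the author's username and the `/opt/open-webui` paths. As a sketch, a hypothetical `render_unit` helper can print the same directives for another user or install directory. Like the unit itself, it calls the venv's `open-webui` binary directly instead of activating the venv.

```shell
#!/usr/bin/env bash
# Sketch: print an open-webui systemd unit for a given user and install dir.
# The directives mirror the unit in this guide; only USER and DIR are parameters.
render_unit() {
  if [[ -z "${1:-}" ]]; then
    echo "usage: render_unit USER [DIR]" >&2
    return 1
  fi
  local user="$1" dir="${2:-/opt/open-webui}"
  cat <<EOF
[Unit]
Description=Open WebUI (LLAMA)
After=network.target

[Service]
WorkingDirectory=${dir}
ExecStart=${dir}/open-webui-env/bin/open-webui serve
User=${user}
RemainAfterExit=yes
Restart=on-failure
RestartSec=10

[Install]
WantedBy=multi-user.target
EOF
}
```

For example: `render_unit "$USER" | sudo tee /etc/systemd/system/open-webui.service`.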

```ini
[Unit]
Description=Open WebUI (LLAMA)
After=network.target

[Service]
WorkingDirectory=/opt/open-webui
ExecStart=/opt/open-webui/open-webui-env/bin/open-webui serve
User=nguyenvinhlinh
RemainAfterExit=yes
Restart=on-failure
RestartSec=10

[Install]
WantedBy=multi-user.target
```

Enable and start the open-webui service:

```shell
$ sudo systemctl daemon-reload
$ sudo systemctl enable open-webui
$ sudo systemctl start open-webui
```

References

- Github Open-WebUI, https://github.com/open-webui/open-webui

- Ollama, https://ollama.com/

- How to enable a virtualenv in a systemd service unit?, https://stackoverflow.com/questions/37211115/how-to-enable-a-virtualenv-in-a-systemd-service-unit